Transfer learning from NVIDIA's pre-trained StyleGAN (FFHQ) #279

Comments


Hi, I faced the same problem and found that the model's default configuration differs from the one used for the pre-trained model.

Solution

Change the configuration from

Explanation

The configuration controls the model's channel_base, the number of layers in the mapping network, and the minibatch standard deviation layer of the discriminator. For instance, the mapping network has 8 layers with

To keep the model structure the same as the pre-trained one, you should ensure that

stylegan2-ada-pytorch/train.py Lines 154 to 161 in 6f160b3

stylegan2-ada-pytorch/train.py Lines 176 to 183 in 6f160b3
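To make the point above concrete, here is a minimal sketch of comparing the three settings mentioned (channel_base, mapping layers, minibatch standard deviation) between a pre-trained model and the current run. The `ArchSettings` class, the `diff_settings` helper, and all numeric values except the 8 mapping layers are hypothetical and purely illustrative; they are not read from train.py or from the checkpoint.

```python
from dataclasses import dataclass, fields


@dataclass(frozen=True)
class ArchSettings:
    """Hypothetical container for the settings the configuration controls."""
    channel_base: int        # base channel count of the networks
    mapping_layers: int      # number of layers in the mapping network
    mbstd_group_size: int    # group size of the minibatch std-dev layer


def diff_settings(pretrained, current):
    """Return {field: (pretrained_value, current_value)} for every differing field."""
    return {
        f.name: (getattr(pretrained, f.name), getattr(current, f.name))
        for f in fields(pretrained)
        if getattr(pretrained, f.name) != getattr(current, f.name)
    }


# Illustrative values only (assumptions, not taken from the repository).
pretrained = ArchSettings(channel_base=16384, mapping_layers=8, mbstd_group_size=8)
current = ArchSettings(channel_base=16384, mapping_layers=2, mbstd_group_size=4)

mismatches = diff_settings(pretrained, current)
for name, (want, got) in mismatches.items():
    print(f"{name}: pre-trained={want}, current={got}")
```

Any field reported here would mean the two models do not share the same structure, which is the situation the explanation above warns about.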

In addition, when loading the pre-trained model, the function

stylegan2-ada-pytorch/torch_utils/misc.py Lines 153 to 160 in 6f160b3
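The referenced lines are not reproduced here, so the following is only a sketch under a stated assumption: if weights are copied by parameter name and unmatched names are skipped without an error, a structural mismatch can go unnoticed. The helper `audit_copy` and the parameter names and shapes below are hypothetical, chosen for illustration, and plain dicts stand in for real tensors.

```python
def audit_copy(src_shapes, dst_shapes):
    """Classify parameters for a name-based copy from src (pre-trained) to dst (new model).

    Both arguments map parameter names to shape tuples.
    """
    return {
        # Present in both with identical shapes: these would be copied cleanly.
        "copied": sorted(n for n in dst_shapes
                         if n in src_shapes and src_shapes[n] == dst_shapes[n]),
        # Present in both but with different shapes: a copy would fail or be wrong.
        "shape_mismatch": sorted(n for n in dst_shapes
                                 if n in src_shapes and src_shapes[n] != dst_shapes[n]),
        # Needed by the new model but absent from the checkpoint: stay randomly initialized.
        "missing_in_src": sorted(n for n in dst_shapes if n not in src_shapes),
        # Present in the checkpoint but not in the new model: silently dropped.
        "unused_from_src": sorted(n for n in src_shapes if n not in dst_shapes),
    }


# Illustrative example: a checkpoint with 8 mapping layers, a new model with only 2.
src = {f"mapping.fc{i}.weight": (512, 512) for i in range(8)}
dst = {f"mapping.fc{i}.weight": (512, 512) for i in range(2)}

report = audit_copy(src, dst)
print(len(report["copied"]), "copied,", len(report["unused_from_src"]), "unused")
```

Running a check like this before training would surface dropped or uninitialized layers that a permissive name-based copy would otherwise hide.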


@JackywithaWhiteDog Hi. Where did this weight come from? I can only find the 256x256 pre-trained weights in the transfer-learning folder. The website is different from the one in this issue.


Hi @githuboflk, sorry that I didn't notice your question. I also used the pre-trained weights provided in the README, as you mentioned. However, I think the checkpoint in this issue is available in the NVIDIA NGC Catalog.

Hi,

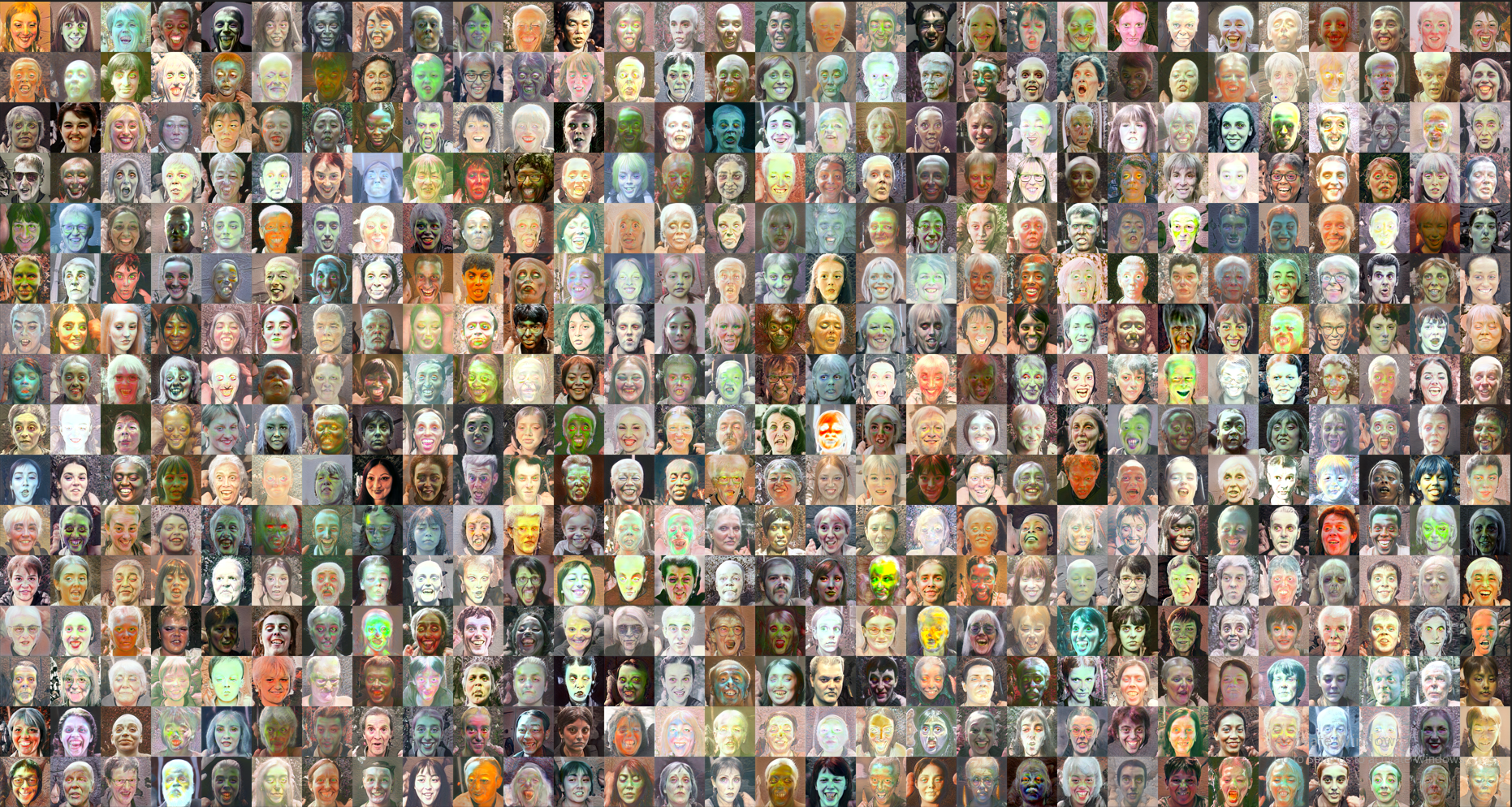

Utilize the pre-trained pkl file: https://api.ngc.nvidia.com/v2/models/nvidia/research/stylegan2/versions/1/files/stylegan2-ffhq-256x256.pkl. I've attempted to transfer learning (without augmentation) from (FFHQ->CelebA-HQ).

However, when looking a the init generated images, I see this:

but when checking the FID against the FFHQ dataset FID=~9.

Can anyone explain what is going?
