|

30 | 30 | "\n", |

31 | 31 | "## Who uses PyTorch?\n", |

32 | 32 | "\n", |

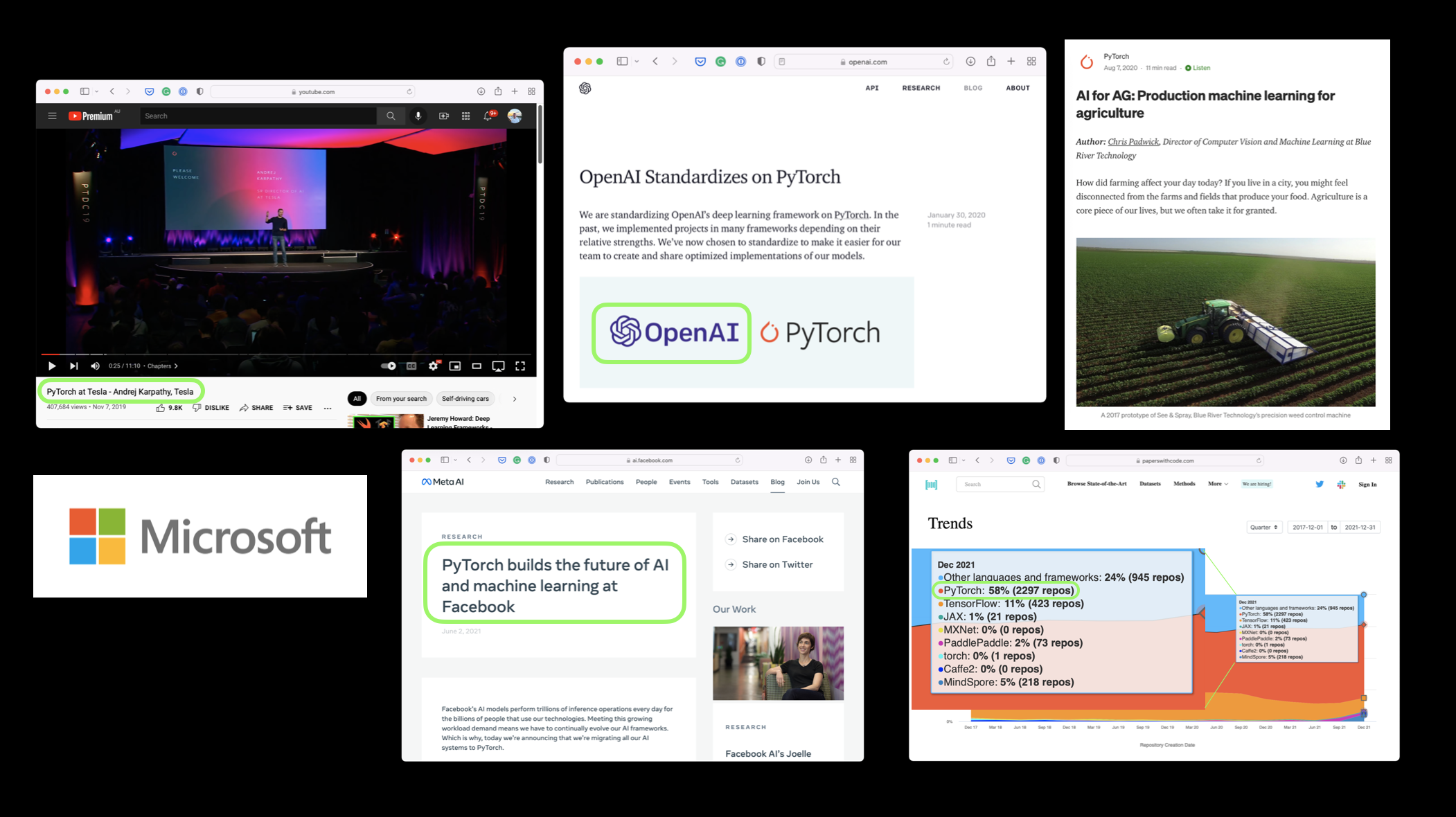

33 | | - "Many of the worlds largest technology companies such as [Meta (Facebook)](https://ai.facebook.com/blog/pytorch-builds-the-future-of-ai-and-machine-learning-at-facebook/), Tesla and Microsoft as well as artificial intelligence research companies such as [OpenAI use PyTorch](https://openai.com/blog/openai-pytorch/) to power research and bring machine learning to their products.\n", |

| 33 | + "Many of the world's largest technology companies such as [Meta (Facebook)](https://ai.facebook.com/blog/pytorch-builds-the-future-of-ai-and-machine-learning-at-facebook/), Tesla and Microsoft as well as artificial intelligence research companies such as [OpenAI use PyTorch](https://openai.com/blog/openai-pytorch/) to power research and bring machine learning to their products.\n", |

34 | 34 | "\n", |

35 | 35 | "\n", |

36 | 36 | "\n", |

37 | | - "For example, Andrej Karpathy (head of AI at Tesla) has given several talks ([PyTorch DevCon 2019](https://youtu.be/oBklltKXtDE), [Tesla AI Day 2021](https://youtu.be/j0z4FweCy4M?t=2904)) about how Tesla use PyTorch to power their self-driving computer vision models.\n", |

| 37 | + "For example, Andrej Karpathy (head of AI at Tesla) has given several talks ([PyTorch DevCon 2019](https://youtu.be/oBklltKXtDE), [Tesla AI Day 2021](https://youtu.be/j0z4FweCy4M?t=2904)) about how Tesla uses PyTorch to power their self-driving computer vision models.\n", |

38 | 38 | "\n", |

39 | 39 | "PyTorch is also used in other industries such as agriculture to [power computer vision on tractors](https://medium.com/pytorch/ai-for-ag-production-machine-learning-for-agriculture-e8cfdb9849a1).\n", |

40 | 40 | "\n", |

|

66 | 66 | "| **Creating tensors** | Tensors can represent almost any kind of data (images, words, tables of numbers). |\n", |

67 | 67 | "| **Getting information from tensors** | If you can put information into a tensor, you'll want to get it out too. |\n", |

68 | 68 | "| **Manipulating tensors** | Machine learning algorithms (like neural networks) involve manipulating tensors in many different ways such as adding, multiplying, combining. | \n", |

69 | | - "| **Dealing with tensor shapes** | One of the most common issues in machine learning is dealing with shape mismatches (trying to mixed wrong shaped tensors with other tensors). |\n", |

| 69 | + "| **Dealing with tensor shapes** | One of the most common issues in machine learning is dealing with shape mismatches (trying to mix wrong shaped tensors with other tensors). |\n", |

70 | 70 | "| **Indexing on tensors** | If you've indexed on a Python list or NumPy array, it's very similar with tensors, except they can have far more dimensions. |\n", |

71 | 71 | "| **Mixing PyTorch tensors and NumPy** | PyTorch plays with tensors ([`torch.Tensor`](https://pytorch.org/docs/stable/tensors.html)), NumPy likes arrays ([`np.ndarray`](https://numpy.org/doc/stable/reference/generated/numpy.ndarray.html)) sometimes you'll want to mix and match these. | \n", |

72 | 72 | "| **Reproducibility** | Machine learning is very experimental and since it uses a lot of *randomness* to work, sometimes you'll want that *randomness* to not be so random. |\n", |

|

501 | 501 | "id": "LhXXgq-dTGe3" |

502 | 502 | }, |

503 | 503 | "source": [ |

504 | | - "`MATRIX` has two dimensions (did you count the number of square brakcets on the outside of one side?).\n", |

| 504 | + "`MATRIX` has two dimensions (did you count the number of square brackets on the outside of one side?).\n", |

505 | 505 | "\n", |

506 | 506 | "What `shape` do you think it will have?" |

507 | 507 | ] |
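
To check the bracket-counting rule from the cell above without PyTorch, here is a minimal plain-Python sketch. The helper `nested_shape` and the values in `MATRIX` are hypothetical stand-ins, not part of the notebook; the notebook itself would read `ndim` and `shape` off a `torch.Tensor`.

```python
def nested_shape(data):
    """Return the shape of a regularly nested list as a tuple.

    Hypothetical helper: each level of nesting (each opening square
    bracket on one side) contributes one dimension.
    """
    shape = []
    while isinstance(data, list):
        shape.append(len(data))
        # Step one level deeper; stop if the list is empty.
        data = data[0] if data else None
    return tuple(shape)


# Illustrative values only (assumed, not taken from the diff).
MATRIX = [[7, 8],
          [9, 10]]

shape = nested_shape(MATRIX)
ndim = len(shape)  # number of dimensions == depth of bracket nesting
print(ndim, shape)
```

Two opening brackets on one side means two dimensions, and the shape lists how many elements sit at each level.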

|

697 | 697 | "\n", |

698 | 698 | "And machine learning models such as neural networks manipulate and seek patterns within tensors.\n", |

699 | 699 | "\n", |

700 | | - "But when building machine learning models with PyTorch, it's rare you'll create tensors by hand (like what we've being doing).\n", |

| 700 | + "But when building machine learning models with PyTorch, it's rare you'll create tensors by hand (like what we've been doing).\n", |

701 | 701 | "\n", |

702 | 702 | "Instead, a machine learning model often starts out with large random tensors of numbers and adjusts these random numbers as it works through data to better represent it.\n", |

703 | 703 | "\n", |

|

984 | 984 | "\n", |

985 | 985 | "Some are specific for CPU and some are better for GPU.\n", |

986 | 986 | "\n", |

987 | | - "Getting to know which is which can take some time.\n", |

| 987 | + "Getting to know which one can take some time.\n", |

988 | 988 | "\n", |

989 | 989 | "Generally if you see `torch.cuda` anywhere, the tensor is being used for GPU (since Nvidia GPUs use a computing toolkit called CUDA).\n", |

990 | 990 | "\n", |

|

1901 | 1901 | "id": "bXKozI4T0hFi" |

1902 | 1902 | }, |

1903 | 1903 | "source": [ |

1904 | | - "Without the transpose, the rules of matrix mulitplication aren't fulfilled and we get an error like above.\n", |

| 1904 | + "Without the transpose, the rules of matrix multiplication aren't fulfilled and we get an error like above.\n", |

1905 | 1905 | "\n", |

1906 | 1906 | "How about a visual? \n", |

1907 | 1907 | "\n", |
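
The inner-dimension rule behind that error can be sketched in plain Python. This is an illustration under assumptions, not the notebook's code: `matmul` and `transpose` are hypothetical helpers, the values of `tensor_A` and `tensor_B` are made up for the example, and the notebook would use PyTorch's own matrix multiplication instead.

```python
def transpose(m):
    """Swap rows and columns of a nested-list matrix."""
    return [list(row) for row in zip(*m)]


def matmul(a, b):
    """Multiply two nested-list matrices, enforcing the inner-dimension rule."""
    inner_a, inner_b = len(a[0]), len(b)
    if inner_a != inner_b:
        raise ValueError(f"inner dimensions must match: {inner_a} != {inner_b}")
    # zip(*b) iterates over the columns of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


# Illustrative values only (assumed): both matrices have shape (3, 2).
tensor_A = [[1, 2], [3, 4], [5, 6]]
tensor_B = [[7, 10], [8, 11], [9, 12]]

try:
    matmul(tensor_A, tensor_B)  # (3, 2) @ (3, 2): inner dimensions 2 and 3 differ
except ValueError as err:
    print("Error:", err)

# Transposing tensor_B gives shape (2, 3), so (3, 2) @ (2, 3) -> (3, 3).
result = matmul(tensor_A, transpose(tensor_B))
print(result)
```

The result takes its shape from the outer dimensions, which is why the transposed version produces a 3x3 matrix.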

|

1988 | 1988 | "id": "zIGrP5j1pN7j" |

1989 | 1989 | }, |

1990 | 1990 | "source": [ |

1991 | | - "> **Question:** What happens if you change `in_features` from 2 to 3 above? Does it error? How could you change the shape of the input (`x`) to accomodate to the error? Hint: what did we have to do to `tensor_B` above?" |

| 1991 | + "> **Question:** What happens if you change `in_features` from 2 to 3 above? Does it error? How could you change the shape of the input (`x`) to accommodate to the error? Hint: what did we have to do to `tensor_B` above?" |

1992 | 1992 | ] |

1993 | 1993 | }, |

1994 | 1994 | { |

|

2188 | 2188 | "\n", |

2189 | 2189 | "You can change the datatypes of tensors using [`torch.Tensor.type(dtype=None)`](https://pytorch.org/docs/stable/generated/torch.Tensor.type.html) where the `dtype` parameter is the datatype you'd like to use.\n", |

2190 | 2190 | "\n", |

2191 | | - "First we'll create a tensor and check it's datatype (the default is `torch.float32`)." |

| 2191 | + "First we'll create a tensor and check its datatype (the default is `torch.float32`)." |

2192 | 2192 | ] |

2193 | 2193 | }, |

2194 | 2194 | { |

|

2289 | 2289 | } |

2290 | 2290 | ], |

2291 | 2291 | "source": [ |

2292 | | - "# Create a int8 tensor\n", |

| 2292 | + "# Create an int8 tensor\n", |

2293 | 2293 | "tensor_int8 = tensor.type(torch.int8)\n", |

2294 | 2294 | "tensor_int8" |

2295 | 2295 | ] |

|

3139 | 3139 | "source": [ |

3140 | 3140 | "Just as you might've expected, the tensors come out with different values.\n", |

3141 | 3141 | "\n", |

3142 | | - "But what if you wanted to created two random tensors with the *same* values.\n", |

| 3142 | + "But what if you wanted to create two random tensors with the *same* values.\n", |

3143 | 3143 | "\n", |

3144 | 3144 | "As in, the tensors would still contain random values but they would be of the same flavour.\n", |

3145 | 3145 | "\n", |

|

3220 | 3220 | "It looks like setting the seed worked. \n", |

3221 | 3221 | "\n", |

3222 | 3222 | "> **Resource:** What we've just covered only scratches the surface of reproducibility in PyTorch. For more, on reproducibility in general and random seeds, I'd checkout:\n", |

3223 | | - "> * [The PyTorch reproducibility documentation](https://pytorch.org/docs/stable/notes/randomness.html) (a good exericse would be to read through this for 10-minutes and even if you don't understand it now, being aware of it is important).\n", |

| 3223 | + "> * [The PyTorch reproducibility documentation](https://pytorch.org/docs/stable/notes/randomness.html) (a good exercise would be to read through this for 10-minutes and even if you don't understand it now, being aware of it is important).\n", |

3224 | 3224 | "> * [The Wikipedia random seed page](https://en.wikipedia.org/wiki/Random_seed) (this'll give a good overview of random seeds and pseudorandomness in general)." |

3225 | 3225 | ] |

3226 | 3226 | }, |
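
The same seeding idea can be tried with Python's standard `random` module. This is a rough analogy rather than the notebook's PyTorch code: `random_values` is a hypothetical helper, and the seed value 42 is an arbitrary choice.

```python
import random


def random_values(n, seed=None):
    """Return n pseudorandom floats; a fixed seed makes them repeatable."""
    rng = random.Random(seed)  # seed=None draws the seed from system entropy
    return [rng.random() for _ in range(n)]


# Unseeded: two calls will almost certainly give different values.
unseeded_a = random_values(4)
unseeded_b = random_values(4)
print(unseeded_a == unseeded_b)

# Seeded: the values are still pseudorandom but identical across calls.
seeded_a = random_values(4, seed=42)
seeded_b = random_values(4, seed=42)
print(seeded_a == seeded_b)
```

The seeded lists match because a pseudorandom generator is deterministic once its starting state is fixed.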

|
