| Name | Major | GDSC Role | Email |
|---|---|---|---|
| HUICHAN SEO | Computer Science | Lead | [email protected] |
| HYEONJUNG HWANG | Computer Science | General | [email protected] |
| SEONHO LEE | Multimedia Engineering | Core | [email protected] |
| EUNSEO LIM | Information Communication Engineering | General | [email protected] |

Our solution aims to give hearing-impaired individuals an equal opportunity to express their thoughts freely through a pronunciation correction process. The process converts the user's speech into text for visual feedback, uses AI to explain in detail which areas need improvement, and transforms the user's pronunciation into vibrations for tactile feedback. With this process, we strive to eliminate the fear of speaking among hearing-impaired people and to create a world where they can express their thoughts freely.
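The vibration step could work roughly as sketched below. This is a minimal illustration under assumptions, not earlips' actual implementation. It assumes the recording is available as mono PCM samples in [-1.0, 1.0]. It splits the recording into short frames and maps each frame's RMS loudness to a motor strength from 0 to 255. The output pair (timings in milliseconds, amplitudes) has the same shape as the arrays Android's `VibrationEffect.createWaveform(timings, amplitudes, repeat)` accepts. The function name `to_vibration_waveform` and the 50 ms frame size are hypothetical choices.

```python
import math


def to_vibration_waveform(samples, sample_rate, frame_ms=50):
    """Hypothetical helper: map mono PCM samples in [-1.0, 1.0] to a vibration pattern.

    Returns (timings_ms, amplitudes), where each amplitude is 0..255
    (0 = motor off), one entry per frame of roughly `frame_ms` milliseconds.
    """
    frame_len = max(1, int(sample_rate * frame_ms / 1000))
    timings, rms_values = [], []
    for start in range(0, len(samples), frame_len):
        frame = samples[start:start + frame_len]
        # The last frame may be shorter, so compute its real duration
        timings.append(round(len(frame) * 1000 / sample_rate))
        # Root-mean-square energy: how loud the speech is in this frame
        rms_values.append(math.sqrt(sum(s * s for s in frame) / len(frame)))
    peak = max(rms_values, default=0.0)
    if peak == 0.0:
        # Pure silence (or no audio): keep the motor off
        return timings, [0] * len(timings)
    # Scale loudness relative to the loudest frame onto the 0..255 range
    amplitudes = [round(255 * r / peak) for r in rms_values]
    return timings, amplitudes
```

Normalizing against the loudest frame means quiet speakers still feel a clear pattern. The trade-off is that the pattern shows relative loudness within one recording, not absolute volume.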

Our approach supports Quality Education (SDG 4), by promoting inclusive education, and Reduced Inequalities (SDG 10). We aim to contribute to a more inclusive and equitable world where everyone can express their thoughts freely, receive a quality education, and face fewer inequalities.

| Home Screen | Learning Screen | Phoneme Screen | Word Screen |
|---|---|---|---|

- Home Screen: Pronunciation score and daily learning graphs.
- Learning Screen: Learning logs by date.
- Phoneme Screen: Visuals and guides for phoneme articulation.
- Word Screen: Sound-to-vibration buttons, GIFs for pronunciation practice, interactive phoneme guides powered by Google's Gemini, detailed phoneme explanations, and voice recording with result review.
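The Gemini-powered phoneme guide could be wired up roughly as follows. This sketch assumes the `google-generativeai` Python SDK (`genai.configure`, `genai.GenerativeModel`, `generate_content`). The source does not say whether earlips calls Gemini from the app or from the server. The function names, the prompt wording, and the default model name `gemini-pro` are illustrative assumptions, and the available Gemini model names change over time. The prompt builder is kept separate from the network call so it can be checked offline.

```python
def build_phoneme_prompt(word: str, ipa: str) -> str:
    """Hypothetical helper: ask Gemini to explain each phonetic symbol in a word."""
    # Illustrative wording: focus on visible articulation (lips, tongue, jaw)
    # because the target users may not be able to rely on hearing the sound
    return (
        f"Explain how to pronounce the word '{word}', transcribed as {ipa}. "
        "For each phonetic symbol, describe in one short sentence where the lips, "
        "tongue and jaw should be, without describing what the sound sounds like."
    )


def explain_phonemes(word: str, ipa: str, api_key: str, model_name: str = "gemini-pro") -> str:
    """Send the prompt to Gemini and return its text answer (needs network access)."""
    # Imported lazily so the prompt builder works without the SDK installed
    import google.generativeai as genai

    genai.configure(api_key=api_key)
    model = genai.GenerativeModel(model_name)  # model name is an assumption
    response = model.generate_content(build_phoneme_prompt(word, ipa))
    return response.text
```

For example, `explain_phonemes("ship", "/ʃɪp/", api_key="YOUR_API_KEY")` would return Gemini's explanation as plain text, ready to display next to the phoneme guide.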

| Sentence Screen (fix) | Paragraph Screen | Script Screen | Live Screen (fix) |
|---|---|---|---|

- Sentence Screen: Same layout as the Word Screen.
- Paragraph Screen: Allows users to record their voice for a given script and receive feedback.
- Script Screen: Users can write their own scripts and record their voice for feedback.
- Live Screen: Displays the user's pronunciation in real time.
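For the Paragraph and Script screens, one simple way to turn a transcript into feedback is to align it word by word with the target script. The sketch below uses Python's `difflib.SequenceMatcher` for that alignment. It is an assumption for illustration, not necessarily how earlips scores recordings. It also measures agreement between the recognized words and the script, not phonetic accuracy. `compare_to_script` and its 0-100 score are hypothetical.

```python
import difflib
import re


def _words(text: str) -> list[str]:
    # Lowercase and drop punctuation so "Apples." matches "apples"
    return re.findall(r"[\w']+", text.lower())


def compare_to_script(script: str, transcript: str):
    """Hypothetical helper: return (score 0-100, list of word-level differences).

    Each difference is (tag, expected_words, heard_words), where tag is
    "replace", "delete" (word skipped) or "insert" (extra word spoken).
    """
    expected, heard = _words(script), _words(transcript)
    matcher = difflib.SequenceMatcher(a=expected, b=heard, autojunk=False)
    issues = [
        (tag, " ".join(expected[i1:i2]), " ".join(heard[j1:j2]))
        for tag, i1, i2, j1, j2 in matcher.get_opcodes()
        if tag != "equal"
    ]
    # ratio() = 2 * matched words / total words in both sequences
    return round(100 * matcher.ratio()), issues
```

For example, `compare_to_script("The quick brown fox", "the quick brown box")` returns `(75, [("replace", "fox", "box")])`. The app could then highlight "fox" as the word to practice.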

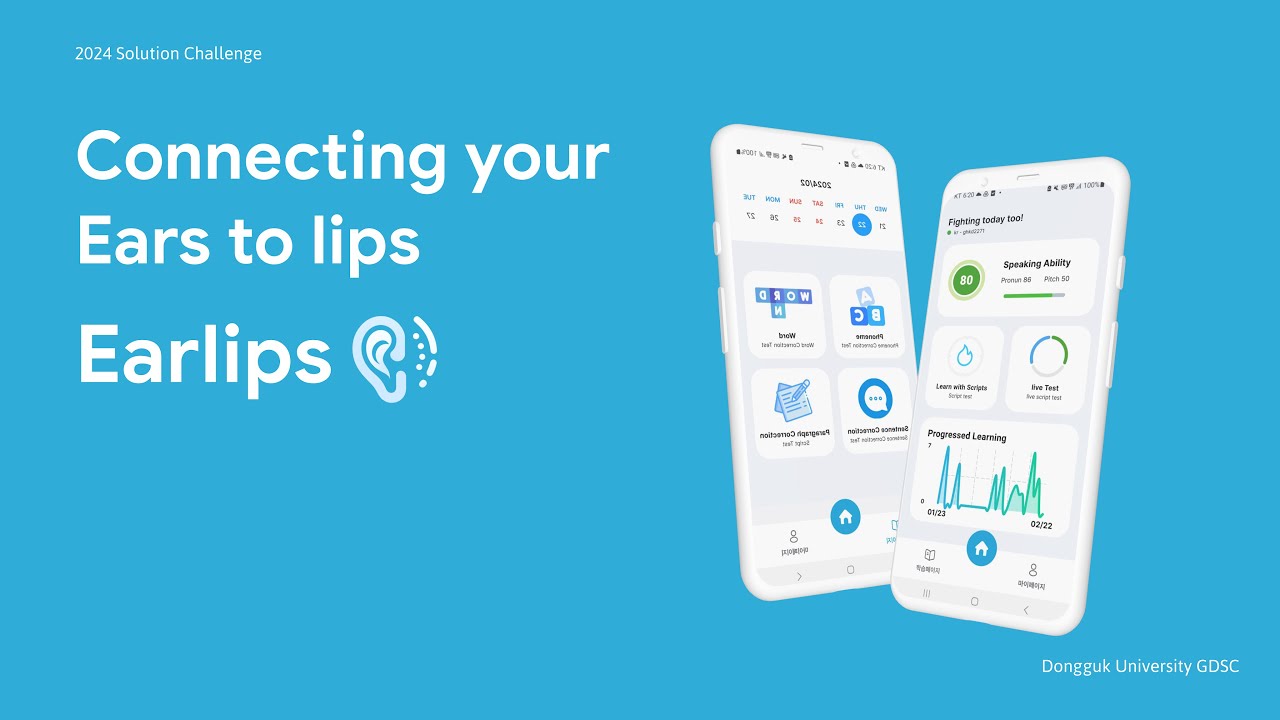

Our app is named earlips.

The mission of the Solution Challenge is to solve for one or more of the United Nations' 17 Sustainable Development Goals using Google technology. We provide pronunciation and speech learning services for individuals with hearing impairments.

- Create a Virtual Machine instance with one NVIDIA Tesla T4 GPU, 2 cores, and 15 GB of memory. In my case, I used Google Cloud Platform with the following configuration:
  - Machine type: n1-standard-4
  - GPU: 1 x NVIDIA Tesla T4
  - Cores: 2 (n1-standard-4 provides 4 vCPUs, i.e. 2 physical cores)
  - Memory: 15 GB
  - Operating system: Deep Learning on Linux
  - OS version: Deep Learning VM with CUDA 11.8 M116 (Debian 11, Python 3.10, CUDA 11.8 preinstalled)

- Set up a Git server on the created VM instance so you can upload the server files to it:

  ```bash
  # Example command for setting up a git file upload server
  git init --bare my-repo.git
  ```

- In the server console, run the following command to install the necessary requirements:

  ```bash
  pip install -r requirements.txt
  ```

  In my case, I installed only the packages listed in `my_install_package.txt`.

- Run the server with the following command, replacing x.x.x.x with the desired host IP address and x with the preferred port number:

  ```bash
  uvicorn server:app --reload --host=x.x.x.x --port=x
  ```

- If you encounter an FFmpeg error, resolve it by running the following command in the server console:

  ```bash
  conda install ffmpeg
  ```

- AI: openai/whisper-large-v3 (Hugging Face)
- AI: Gemini, which we use to explain phonetic symbols in text
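The `uvicorn server:app` command expects a module `server.py` that exposes an ASGI application named `app`. The project's actual server code and framework are not shown here, so the following is only a sketch under assumptions. It defines a hypothetical `POST /transcribe` endpoint that receives an audio file as the raw request body and returns the Whisper transcript as JSON. The model `openai/whisper-large-v3` is loaded through the Hugging Face `transformers` `pipeline` on the first request. The app is written as a bare ASGI callable to avoid assuming a particular web framework. With FastAPI, the same route would be a decorated function.

```python
import asyncio
import json

_asr = None  # Whisper pipeline, created lazily on the first request


def whisper_transcribe(audio: bytes) -> str:
    """Transcribe one uploaded audio file with openai/whisper-large-v3."""
    global _asr
    if _asr is None:
        # Heavy import and model download happen once, on first use
        from transformers import pipeline

        _asr = pipeline(
            "automatic-speech-recognition",
            model="openai/whisper-large-v3",
            device=0,  # first CUDA device, e.g. the T4 on the VM
        )
    # The pipeline decodes raw file bytes with FFmpeg before inference
    return _asr(audio)["text"]


# Module-level hook so tests can swap in a stub transcriber
transcribe = whisper_transcribe


async def send_json(send, status: int, payload: dict) -> None:
    await send({
        "type": "http.response.start",
        "status": status,
        "headers": [(b"content-type", b"application/json")],
    })
    await send({"type": "http.response.body", "body": json.dumps(payload).encode()})


async def app(scope, receive, send):
    """Bare ASGI app: POST /transcribe (hypothetical path) with audio as the body."""
    if scope["type"] == "lifespan":
        # Acknowledge uvicorn's startup and shutdown events
        while True:
            message = await receive()
            if message["type"] == "lifespan.startup":
                await send({"type": "lifespan.startup.complete"})
            elif message["type"] == "lifespan.shutdown":
                await send({"type": "lifespan.shutdown.complete"})
                return
    if scope["type"] != "http":
        return
    if scope["method"] != "POST" or scope["path"] != "/transcribe":
        await send_json(send, 404, {"error": "not found"})
        return
    # The request body may arrive in several chunks
    chunks = []
    more_body = True
    while more_body:
        message = await receive()
        chunks.append(message.get("body", b""))
        more_body = message.get("more_body", False)
    # Run the blocking model call off the event loop
    text = await asyncio.to_thread(transcribe, b"".join(chunks))
    await send_json(send, 200, {"text": text})
```

With the server running, `curl --data-binary @sample.wav http://x.x.x.x:x/transcribe` (where `sample.wav` is any local recording) would return a JSON object with a `text` field. The pipeline relies on FFmpeg to decode raw audio bytes, so the FFmpeg installation step above matters for this sketch as well. Recordings longer than 30 seconds may also need the pipeline's `chunk_length_s` option.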