This repo implements a few algorithms for synchronizing changes in a SQL database table to an external destination, as described in this blog post.

This is useful because you might want to monitor your users table for changes and act on them as they happen, for example by updating those users in your data warehouse or in Mailchimp.

If you'd rather not worry about these kinds of details and want a much more fully-featured way to handle those use cases, check out Grouparoo.

You can run all of the tests:

    $ npm install
    $ npm run all

    Test Suites: 2 failed, 3 passed, 5 total
    Tests: 4 failed, 36 passed, 40 total
    Snapshots: 0 total
    Time: 2.238 s

Or run just one algorithm's tests:

    $ npm install
    $ npm run dbtime

    Test Suites: 1 passed, 1 total
    Tests: 8 passed, 8 total
    Snapshots: 0 total
    Time: 0.818 s, estimated 1 s

Some failures are expected, because some of the algorithms are not complete enough to handle every case.

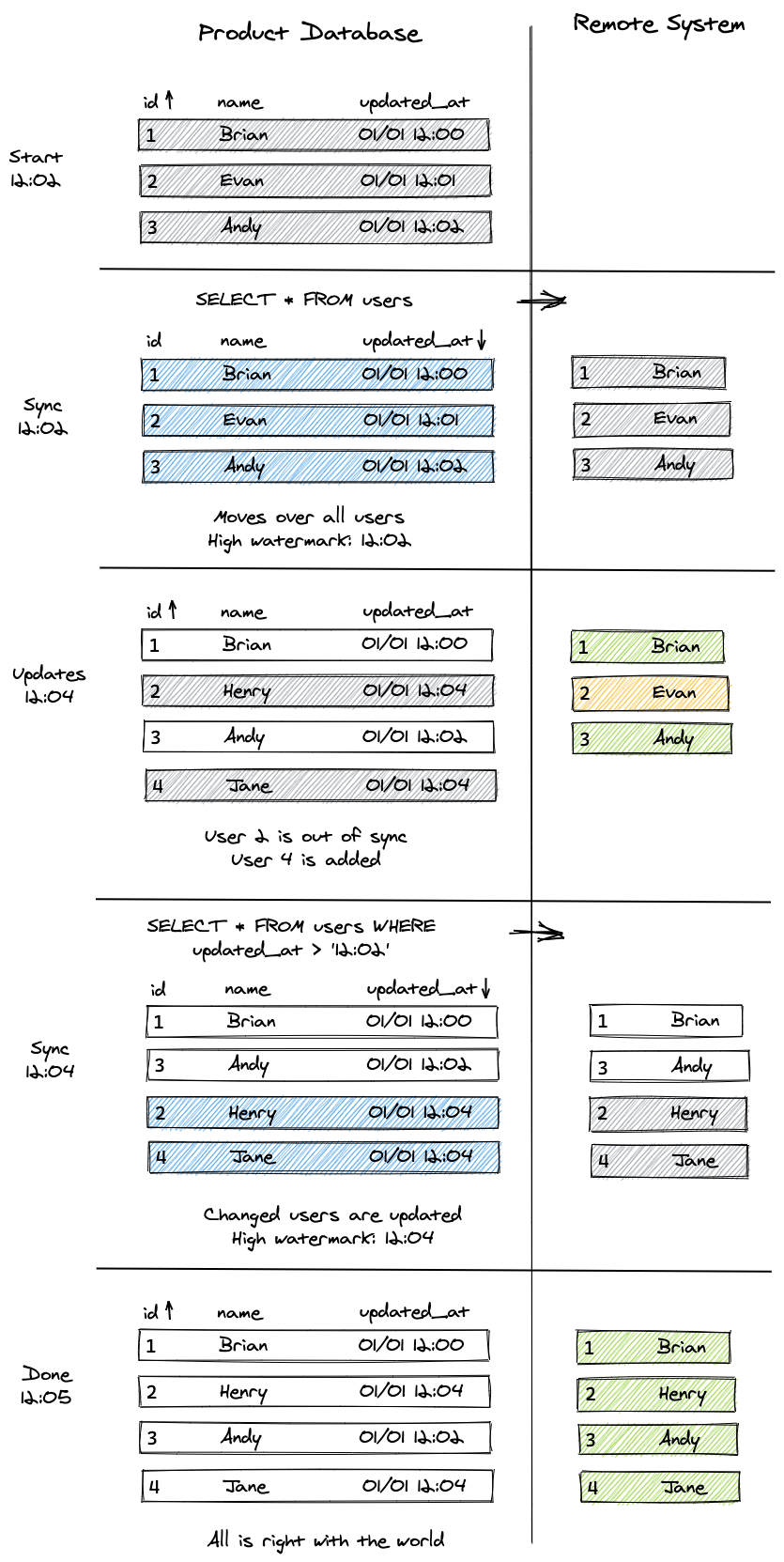

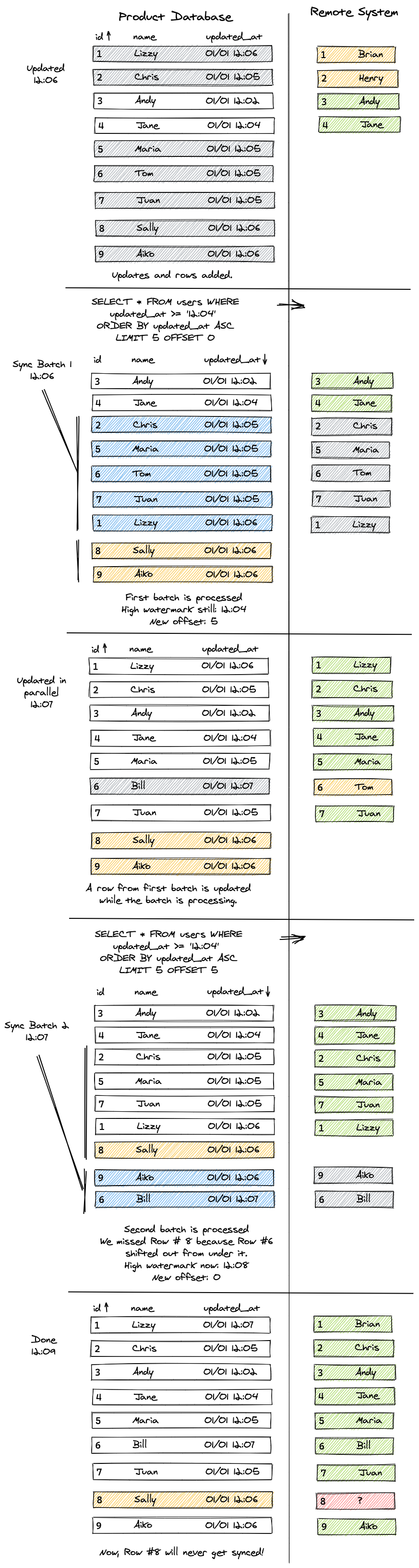

All of the current approaches do delta-based synchronization using the `updatedAt` timestamp column in the table.
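To make that shared idea concrete, here is a minimal in-memory sketch of delta-based sync; it is not code from this repo. It assumes a hypothetical `Row` shape whose `updatedAt` is a numeric timestamp, and it keeps a high-water mark (`watermark`) equal to the newest `updatedAt` processed so far.

```typescript
// Hypothetical row shape: `updatedAt` is a numeric timestamp (e.g. ms since epoch).
interface Row {
  id: number;
  updatedAt: number;
}

interface SyncResult {
  changed: Row[];
  watermark: number; // newest updatedAt processed so far
}

// Return every row changed at or after the previous watermark, oldest first,
// and advance the watermark to the newest updatedAt among them.
function deltaSync(table: Row[], watermark: number): SyncResult {
  const changed = table
    .filter((row) => row.updatedAt >= watermark)
    .sort((a, b) => a.updatedAt - b.updatedAt || a.id - b.id);
  const newWatermark =
    changed.length > 0 ? changed[changed.length - 1].updatedAt : watermark;
  return { changed, watermark: newWatermark };
}

// Usage: two passes over a small in-memory table.
const table: Row[] = [
  { id: 1, updatedAt: 100 },
  { id: 2, updatedAt: 200 },
];
const first = deltaSync(table, 0);
table.push({ id: 3, updatedAt: 300 });
const second = deltaSync(table, first.watermark);
console.log(first.changed.map((r) => r.id).join(",")); // prints 1,2
console.log(second.changed.map((r) => r.id).join(",")); // prints 2,3
```

The `>=` comparison is a deliberate choice in this sketch: rows that share the watermark timestamp are sent again (row 2 in the second pass) instead of being missed, which assumes the destination tolerates duplicate updates.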

- simple: A naive current-time-based approach with a few failures.

- dbtime: Upgrades simple to use times from the database, removing race conditions, but it might use too much memory.

- batch: Adds batching to save on memory, but introduces failures because of race conditions with offsets.

- steps: A hybrid of batch (most of the time) and dbtime (when there are many rows with the same timestamp).

- secondary: Adds knowledge of an auto-incrementing, ascending column (`id`) to batch without the offset or memory issues.
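One way to read the secondary idea is keyset (or "seek") pagination: order by `(updatedAt, id)` and resume after the last pair seen instead of using an OFFSET. The sketch below is a hypothetical in-memory version under that reading, assuming a numeric `updatedAt` and a unique, auto-incrementing `id`; `nextBatch` and `syncAll` are illustrative names, not the repo's functions.

```typescript
// Hypothetical row shape; `id` is unique and auto-incrementing.
interface Row {
  id: number;
  updatedAt: number;
}

interface Cursor {
  updatedAt: number;
  id: number;
}

// True if `row` sorts strictly after `cursor` in (updatedAt, id) order.
function isAfter(row: Row, cursor: Cursor): boolean {
  return (
    row.updatedAt > cursor.updatedAt ||
    (row.updatedAt === cursor.updatedAt && row.id > cursor.id)
  );
}

// Fetch the next batch after `cursor`, ordered by (updatedAt, id).
// In PostgreSQL the same filter can be written as a row-value comparison:
//   WHERE ("updatedAt", id) > ($1, $2) ORDER BY "updatedAt", id LIMIT $3
function nextBatch(table: Row[], cursor: Cursor, batchSize: number): Row[] {
  return table
    .filter((row) => isAfter(row, cursor))
    .sort((a, b) => a.updatedAt - b.updatedAt || a.id - b.id)
    .slice(0, batchSize);
}

// Drain all pending changes batch by batch, moving the cursor to the last row of each batch.
function syncAll(
  table: Row[],
  start: Cursor,
  batchSize: number
): { ids: number[]; cursor: Cursor } {
  const ids: number[] = [];
  let cursor = start;
  for (;;) {
    const batch = nextBatch(table, cursor, batchSize);
    if (batch.length === 0) break;
    ids.push(...batch.map((row) => row.id));
    const last = batch[batch.length - 1];
    cursor = { updatedAt: last.updatedAt, id: last.id };
  }
  return { ids, cursor };
}

// Usage: six rows share one timestamp, drained in batches of 5.
const rows: Row[] = [1, 2, 3, 4, 5, 6].map((id) => ({ id, updatedAt: 100 }));
rows.push({ id: 7, updatedAt: 50 });
const result = syncAll(rows, { updatedAt: -Infinity, id: -Infinity }, 5);
console.log(result.ids.join(",")); // prints 7,1,2,3,4,5,6
```

Because the cursor includes `id`, the six rows that share `updatedAt: 100` are split across two batches without any being skipped or repeated, and each query returns at most `batchSize` rows.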

Can you find a test (for behavior that should work) that makes some of these fail? That would be great!

The same tests are shared between all the algorithms. Feel free to add a new one.

The current suite is a pretty good set of examples. You can use these methods:

- create: Makes a new row with the given `id` (the primary key). The `id` has to be ascending within the current suite.

- update: Updates a row given its `id` value.

- stepTime: There is a global clock, and this moves it forward. You can't go backwards!

- expectSync: Runs the algorithm. Fails the test if the given array of rows is not processed as expected.
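For experimenting outside the repo, the sketch below is a self-contained toy harness with functions named after the suite's methods. The signatures and behavior (`stepTime(ms)`, `expectSync(algorithm, expectedIds)`, the `SyncAlgorithm` type) are assumptions for illustration, not the repo's actual helpers, and `naive` is a made-up strict-`>` watermark algorithm rather than one of the algorithms listed above.

```typescript
// Toy stand-ins for the suite's helpers. The names mirror the README, but the
// signatures and behavior are assumptions for illustration only.
interface Row {
  id: number;
  updatedAt: number;
}

let clock = 0; // global clock; stepTime only moves it forward
let lastId = 0;
const table: Row[] = [];

function create(id: number): void {
  if (id <= lastId) throw new Error(`id ${id} must be ascending`);
  lastId = id;
  table.push({ id, updatedAt: clock });
}

function update(id: number): void {
  for (const row of table) {
    if (row.id === id) {
      row.updatedAt = clock;
      return;
    }
  }
  throw new Error(`no row with id ${id}`);
}

function stepTime(ms = 1): void {
  if (ms <= 0) throw new Error("the clock can't go backwards");
  clock += ms;
}

// Hypothetical algorithm shape: given the table, return the ids processed this run.
type SyncAlgorithm = (rows: Row[]) => number[];

function expectSync(algorithm: SyncAlgorithm, expectedIds: number[]): void {
  const actual = algorithm(table.slice());
  if (actual.join(",") !== expectedIds.join(",")) {
    throw new Error(`expected [${expectedIds}] but processed [${actual}]`);
  }
}

// Made-up algorithm: strict ">" high-water mark on updatedAt.
let watermark = -1;
const naive: SyncAlgorithm = (rows) => {
  const changed = rows
    .filter((r) => r.updatedAt > watermark)
    .sort((a, b) => a.updatedAt - b.updatedAt || a.id - b.id);
  if (changed.length > 0) watermark = changed[changed.length - 1].updatedAt;
  return changed.map((r) => r.id);
};

create(1);
create(2);
expectSync(naive, [1, 2]);
stepTime(10);
update(1);
expectSync(naive, [1]);

// Row 3 is created on the same clock tick as the last sync, so ">" skips it.
create(3);
let missed = false;
try {
  expectSync(naive, [3]);
} catch {
  missed = true;
}
console.log(missed); // prints true
```

The last scenario is the kind of failing test this README is asking for: a row created on the same clock tick as the previous sync is never picked up by a strict `>` comparison.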

Feel free to write a new algorithm, too. In general, my approach was to write a failing test for the current algorithm and then a new algorithm that fixes it.

Other things that are useful to know for edge cases:

- There is a `batchSize` that the algorithms use, set to `5` here. Use this in your algorithm.
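To see how offsets can race with writes when batching, here is a hypothetical in-memory sketch using a `batchSize` of 5. It reads a ten-row table in OFFSET pages ordered by `(updatedAt, id)` and updates one already-read row between the first and second page; the table and the `page` helper are illustrative, not the repo's code.

```typescript
// Hypothetical row shape for illustration.
interface Row {
  id: number;
  updatedAt: number;
}

const batchSize = 5;

// One OFFSET page, mirroring
//   ORDER BY "updatedAt", id LIMIT batchSize OFFSET offset
function page(table: Row[], offset: number): Row[] {
  return table
    .slice()
    .sort((a, b) => a.updatedAt - b.updatedAt || a.id - b.id)
    .slice(offset, offset + batchSize);
}

// Ten rows, ids 1..10, updated at times 1..10.
const table: Row[] = [];
for (let i = 1; i <= 10; i++) table.push({ id: i, updatedAt: i });

const seen: number[] = [];
seen.push(...page(table, 0).map((r) => r.id)); // ids 1-5

// Between batches, row 3 is updated and moves to the end of the ordering.
table[2].updatedAt = 11;

seen.push(...page(table, batchSize).map((r) => r.id));
seen.push(...page(table, 2 * batchSize).map((r) => r.id));

// Rows that were never read during this pass.
const missing = table.map((r) => r.id).filter((id) => seen.indexOf(id) === -1);
console.log(missing.join(",")); // prints 6
```

Updating row 3 moves it to the end of the ordering, so every later row shifts one position left and row 6 lands in the range that was already read.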

I started making some pictures for the blog post.